Quick Start Guide: Optimal Feature Selection for Regression

In this example we will use optimal feature selection on the Ailerons dataset, which addresses a control problem, namely flying an F16 aircraft. The attributes describe the status of the aircraft, while the goal is to predict the control action on its ailerons.

First we load in the data and split into training and test datasets:

using CSV, DataFrames

df = CSV.read("ailerons.csv", DataFrame)

13750×41 DataFrame

   Row │ climbRate  Sgz    p        q        curPitch  curRoll  absRoll  diffC ⋯
       │ Int64      Int64  Float64  Float64  Float64   Float64  Int64    Int64 ⋯
───────┼────────────────────────────────────────────────────────────────────────
     1 │         2    -56    -0.33    -0.09       0.9      0.2      -11  ⋯
     2 │       470    -39     0.02     0.12      0.39     -0.6      -12
     3 │       165      4     0.14     0.14      0.78      0.4      -11
     4 │      -113      5    -0.12     0.11      1.06      0.6      -10
     5 │      -411    -21    -0.17     0.07      1.33     -0.6      -11  ⋯
     6 │      -105    -42     0.23    -0.06      0.92     -0.6      -12
     7 │       144    -40     0.31    -0.01      0.67      0.6      -10
     8 │       249    -11    -0.38     0.06      0.56      0.4      -11
   ⋮   │     ⋮        ⋮       ⋮        ⋮        ⋮         ⋮        ⋮       ⋮    ⋱
 13744 │      -224    -24    -0.22      0.0      0.97      0.5       -8  ⋯
 13745 │      -204    -27    -0.25     0.01      0.95      0.3       -9
 13746 │       399    -22     0.17      0.2      0.36     -0.2       -9
 13747 │       237     -6     0.26      0.1      0.52      0.7       -8
 13748 │      -148     -3    -0.37     0.09      0.89      0.7       -8  ⋯
 13749 │      -237    -11    -0.47    -0.16       0.9     -0.4       -9
 13750 │       128    -14    -0.07    -0.11       0.5     -1.2      -10

34 columns and 13735 rows omitted

X = df[:, 1:(end - 1)]

y = df.goal

(train_X, train_y), (test_X, test_y) = IAI.split_data(:regression, X, y, seed=1)

Model Fitting

We will use a GridSearch to fit an OptimalFeatureSelectionRegressor:

grid = IAI.GridSearch(
    IAI.OptimalFeatureSelectionRegressor(
        random_seed=1,
    ),
    sparsity=1:10,
)

IAI.fit!(grid, train_X, train_y)

All Grid Results:

 Row │ sparsity  train_score  valid_score  rank_valid_score
     │ Int64     Float64      Float64      Int64
─────┼──────────────────────────────────────────────────────
   1 │        1      0.49788     0.475188                10
   2 │        2     0.663576     0.668303                 9
   3 │        3     0.749368     0.752557                 8
   4 │        4     0.807263     0.804385                 7
   5 │        5     0.812985     0.808196                 6
   6 │        6     0.816881     0.810463                 5
   7 │        7     0.818946     0.813062                 3
   8 │        8     0.818662     0.812035                 4
   9 │        9     0.819254     0.813463                 1
  10 │       10     0.819036      0.81332                 2

Best Params:

sparsity => 9

Best Model - Fitted OptimalFeatureSelectionRegressor:

Constant: 0.000345753

Weights:

SeTime1: -0.00703259

SeTime2: -0.00948655

SeTime3: -0.00954495

Sgz: 0

absRoll: 0.0000576494

curRoll: -0.0000862381

diffClb: -0.00000279116

diffRollRate: 0.00253189

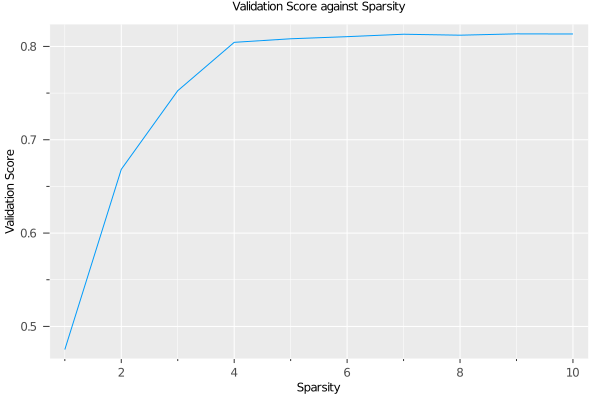

p: -0.000425322The model selected a sparsity of 9 as the best parameter, but we observe that the validation scores are close for many of the parameters. We can use the results of the grid search to explore the tradeoff between the complexity of the regression and the quality of predictions:

using Plots

plot(grid, type=:validation)

The validation score rises quickly as features are added until the sparsity reaches 4 (from 0.475 to 0.804), and improves by less than 0.01 in total between sparsity 4 and the best value at sparsity 9. Depending on the application, we might choose a lower sparsity for the final model than the value selected by the grid search.
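One way to make that choice systematic is to take the smallest sparsity whose validation score is within a tolerance of the best one. The sketch below is plain Julia applied to the validation scores from the grid results above. The helper name `smallest_adequate_sparsity` and the tolerance of 0.01 are illustrative assumptions, not part of the IAI API.

```julia
# Validation scores copied from the grid search results (sparsity 1 to 10).
sparsities = 1:10
valid_scores = [0.475188, 0.668303, 0.752557, 0.804385, 0.808196,
                0.810463, 0.813062, 0.812035, 0.813463, 0.81332]

# Hypothetical helper: the smallest sparsity whose validation score is
# within `tolerance` of the best score found by the grid search.
function smallest_adequate_sparsity(sparsities, scores; tolerance=0.01)
    threshold = maximum(scores) - tolerance
    idx = findfirst(>=(threshold), scores)
    return sparsities[idx]
end

chosen = smallest_adequate_sparsity(sparsities, valid_scores)
println("Chosen sparsity: ", chosen)
```

With a tolerance of 0.01 this picks a sparsity of 4, which matches the elbow described above. A tighter tolerance keeps more features.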

We can see the relative importance of the selected features with variable_importance:

IAI.variable_importance(IAI.get_learner(grid))

40×2 DataFrame

 Row │ Feature       Importance
     │ Symbol        Float64
─────┼──────────────────────────
   1 │ absRoll         0.340282
   2 │ p               0.184247
   3 │ curRoll         0.119241
   4 │ SeTime3        0.0941509
   5 │ SeTime2        0.0935782
   6 │ SeTime1        0.0690623
   7 │ diffRollRate   0.0481088
   8 │ diffClb        0.0411191
  ⋮  │      ⋮            ⋮
  34 │ diffSeTime4          0.0
  35 │ diffSeTime5          0.0
  36 │ diffSeTime6          0.0
  37 │ diffSeTime7          0.0
  38 │ diffSeTime8          0.0
  39 │ diffSeTime9          0.0
  40 │ q                    0.0

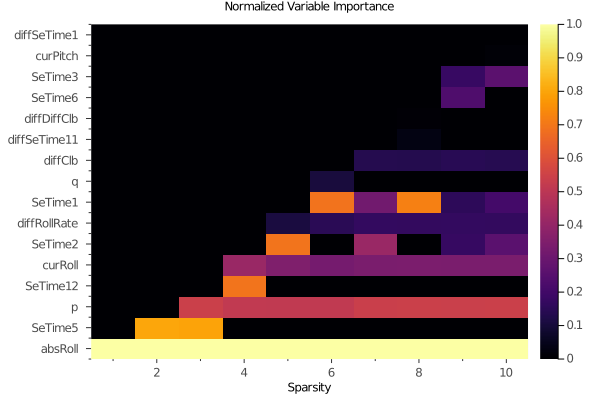

25 rows omittedWe can also look at the feature importance across all sparsity levels:

plot(grid, type=:importance)

We can make predictions on new data using predict:

IAI.predict(grid, test_X)

4125-element Vector{Float64}:
 -0.00103828376
 -0.001249971461
 -0.001207939168
 -0.000808825944
 -0.001157223543
 -0.000977604922
 -0.000829069274
 -0.000773203136
 -0.000932821766
 -0.000835418779
  ⋮
 -0.000699127465
 -0.000697359874
 -0.000538694401
 -0.000537957517
 -0.000952996759
 -0.00113412063
 -0.001219719201
 -0.000770368714
 -0.000979590667

We can evaluate the quality of the model using score with any of the supported loss functions. For example, the $R^2$ on the training set:

IAI.score(grid, train_X, train_y, criterion=:mse)

0.817629588096

Or on the test set:

IAI.score(grid, test_X, test_y, criterion=:mse)

0.817330661094
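To make the link between `criterion=:mse` and $R^2$ concrete, the following plain-Julia sketch computes $R^2$ as one minus the ratio of the model's mean squared error to that of a baseline that always predicts the mean. It assumes this is the normalization behind the reported score. The function `r_squared` and the toy vectors are hypothetical and not part of IAI, and the sketch uses the mean of the evaluated targets as the baseline, whereas a library may use the training mean instead.

```julia
using Statistics

# Hypothetical helper: R^2 = 1 - MSE(model) / MSE(baseline),
# where the baseline always predicts the mean of the observed targets.
function r_squared(y_true, y_pred)
    mse_model = mean((y_true .- y_pred) .^ 2)
    mse_baseline = mean((y_true .- mean(y_true)) .^ 2)
    return 1 - mse_model / mse_baseline
end

# Toy data for illustration only (not taken from the Ailerons dataset).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]

println(r_squared(y_true, y_pred))
```

Under this definition a perfect prediction scores 1, and predicting the mean everywhere scores 0, so the values of about 0.817 above would mean the model removes roughly 82% of the baseline's squared error.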